The Covid-19 pandemic has necessitated some of the biggest changes in working practices for financial market participants in decades. Firms have been forced to adapt to remote working, in many cases for the first time, as travel restrictions and social distancing have made usual working practices difficult. This has greatly increased the need for technology solutions that can keep operations running smoothly and teams communicating effectively from any location.

Among other things, the need to work remotely has greatly accelerated the trend towards digital transformation in the capital markets. Firms had already recognised that investing in ways to extract more value from their data was important; the need to develop new digital tools to facilitate and manage a distributed workforce has turned this into a critical priority.

This, however, has created a new set of challenges. Many firms that now realise there is an urgent need for digital tools such as data analytics simply haven’t yet built the data foundations necessary to make these tools available and effective.

Take, for example, artificial intelligence (AI). For many, AI in the financial markets has underdelivered. Just a few short years ago, vendors and institutions alike were predicting a tidal wave of transformation in the markets as AI began helping firms to do everything from speeding up compliance to managing low-touch sales relationships.

This enthusiasm was not misplaced: artificial intelligence, as well as other forms of algorithm-driven analytics, has an important role to play in the future of financial market operations. However, the reason many of these programmes are taking longer to deliver is that they have not yet been built on the right data foundations. To quote Google’s Research Director, Peter Norvig: “More data beats clever algorithms, but better data beats more data.”

No matter their purpose, all analytics programmes require sound data foundations in order to be effective. For a firm’s data to be truly valuable, it must be integrated into the very fabric of the firm and be usable without friction. In practice, this means data sets must be harmonised and standardised into one consistent format and cover as wide a set of relevant transaction and market data as possible. Furthermore, each data entry should be as comprehensive as possible, with all relevant fields captured for every entry.
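One way to make "as comprehensive as possible" measurable is to track, for each field, the share of records in which it is actually populated. The snippet below is a minimal sketch of that idea only; the field names in `REQUIRED_FIELDS` and the `field_coverage` helper are hypothetical and chosen purely for illustration, not taken from any particular platform.

```python
# Hypothetical list of fields every trade record is expected to carry.
REQUIRED_FIELDS = ("trade_id", "instrument", "side", "quantity", "price", "counterparty")


def field_coverage(records, required=REQUIRED_FIELDS):
    """Return, per required field, the fraction of records with a non-empty value."""
    records = list(records)
    if not records:
        return {field: 0.0 for field in required}
    return {
        field: sum(1 for r in records if r.get(field) not in (None, "")) / len(records)
        for field in required
    }


# Two illustrative records: the second is missing its price and counterparty.
sample = [
    {"trade_id": "T1", "instrument": "EURUSD", "side": "BUY",
     "quantity": 1_000_000, "price": 1.1105, "counterparty": "ClientA"},
    {"trade_id": "T2", "instrument": "EURUSD", "side": "SELL",
     "quantity": 500_000, "price": None},
]
coverage = field_coverage(sample)
```

A report like this does not fix gaps by itself, but it shows which fields and sources need attention before the data is fed into any analytics.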

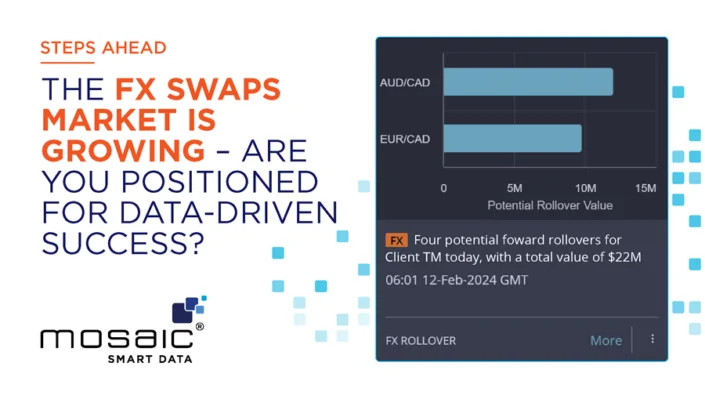

This may sound obvious; however, achieving such a unified, cleansed and enriched data set in the financial markets is often far from straightforward. Within market-facing firms, trades are executed across myriad electronic trading venues, including bilateral liquidity streams, as well as by traditional over-the-counter protocols (i.e. voice). Within the FICC markets, each trading network adheres to its own messaging language for passing and recording trades, and there is often wide variation in the fields captured for a given trade. To add to the complexity, data that firms bring in from external sources will have been processed in a way that is unique to that data provider, so it cannot simply be added to this new unified data set.
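To illustrate what harmonising such sources involves, the sketch below maps two differently shaped records onto one common structure. Everything in it is an assumption made for illustration: the `Trade` schema, the two sources (`venue_a` and `voice`), and their field names and conventions are invented and do not describe any real venue's message format.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Trade:
    """Hypothetical common schema that every source is mapped onto."""
    venue: str
    instrument: str
    side: str              # "BUY" or "SELL"
    quantity: float
    price: float
    executed_at: datetime  # always stored in UTC
    counterparty: Optional[str] = None


def from_venue_a(msg: dict) -> Trade:
    # Assumed electronic venue: terse keys, epoch milliseconds, side as "B"/"S".
    return Trade(
        venue="venue_a",
        instrument=msg["sym"],
        side={"B": "BUY", "S": "SELL"}[msg["side"]],
        quantity=float(msg["qty"]),
        price=float(msg["px"]),
        executed_at=datetime.fromtimestamp(msg["ts_ms"] / 1000, tz=timezone.utc),
        counterparty=msg.get("cpty"),
    )


def from_voice_ticket(ticket: dict) -> Trade:
    # Assumed manually keyed ticket: signed notional, ISO 8601 time with an offset.
    notional = float(ticket["notional"])
    return Trade(
        venue="voice",
        instrument=ticket["instrument"],
        side="BUY" if notional > 0 else "SELL",
        quantity=abs(notional),
        price=float(ticket["price"]),
        executed_at=datetime.fromisoformat(ticket["time"]).astimezone(timezone.utc),
        counterparty=ticket.get("client"),
    )


# One adapter per source; adding a new source means adding one function here.
ADAPTERS = {"venue_a": from_venue_a, "voice": from_voice_ticket}


def normalise(source: str, raw: dict) -> Trade:
    return ADAPTERS[source](raw)


electronic = normalise("venue_a", {"sym": "EURUSD", "side": "B", "qty": "1000000",
                                   "px": "1.1105", "ts_ms": 1591003800000})
voice = normalise("voice", {"instrument": "EURUSD", "notional": -500000,
                            "price": 1.1107, "time": "2020-06-01T10:30:00+01:00",
                            "client": "ClientA"})
```

Keeping one small adapter per source means the quirks of each format stay in one place, while everything downstream works against a single schema. Fields a source does not supply, such as the counterparty on the electronic record above, remain visibly empty rather than being guessed.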

In my previous roles as Head of Trading, not having a clear view of the transaction data we were producing across all our global locations and all our trading channels with clients was a significant disadvantage. The inability to transform that data into one standardised format, and so deliver a coherent view of what was really happening in the fixed income and FX markets, was a barrier to our growth and profitability. Knowing how important this normalised data stream is was one of the reasons I founded Mosaic Smart Data. The company now offers best-in-class data streams at both the micro and macro levels, providing an invaluable asset to institutions: users can understand their activity in a comprehensive way and deliver a more tailored service to their customers.